Crawl budget

A crawl budget is the number of pages a search engine, such as Google, crawls on your website within a given period. It is determined by two main factors: crawl rate and crawl demand. Crawl rate is how fast Google can crawl your site without overloading your server. Crawl demand is how much Google wants to crawl your content, which depends on its importance and freshness.

Crawl budget matters most for large websites with many pages. If Google doesn't crawl all your pages, some might not get indexed. Small websites rarely need to worry about crawl budget.

For example, if your website has 1,000 pages but Google crawls only 500, the other 500 may not appear in search results. To make better use of your crawl budget, keep your website fast, remove duplicate content, and update key pages regularly.
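The arithmetic in the example above can be sketched in a few lines of Python. The function name `crawl_coverage` is hypothetical and used only for illustration; the page counts are the ones from the example.

```python
def crawl_coverage(total_pages: int, crawled_pages: int) -> float:
    """Return the fraction of a site's pages that were crawled (0.0 to 1.0)."""
    if total_pages <= 0:
        raise ValueError("total_pages must be positive")
    if not 0 <= crawled_pages <= total_pages:
        raise ValueError("crawled_pages must be between 0 and total_pages")
    return crawled_pages / total_pages


# Figures from the example: 1,000 pages on the site, 500 of them crawled.
total, crawled = 1_000, 500
coverage = crawl_coverage(total, crawled)
uncrawled = total - crawled
print(f"{coverage:.0%} of pages crawled, {uncrawled} pages not crawled")
```

A coverage well below 100% on a large site is a hint, not proof, that crawl budget is a constraint. Pages can also go uncrawled for other reasons, such as missing internal links.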

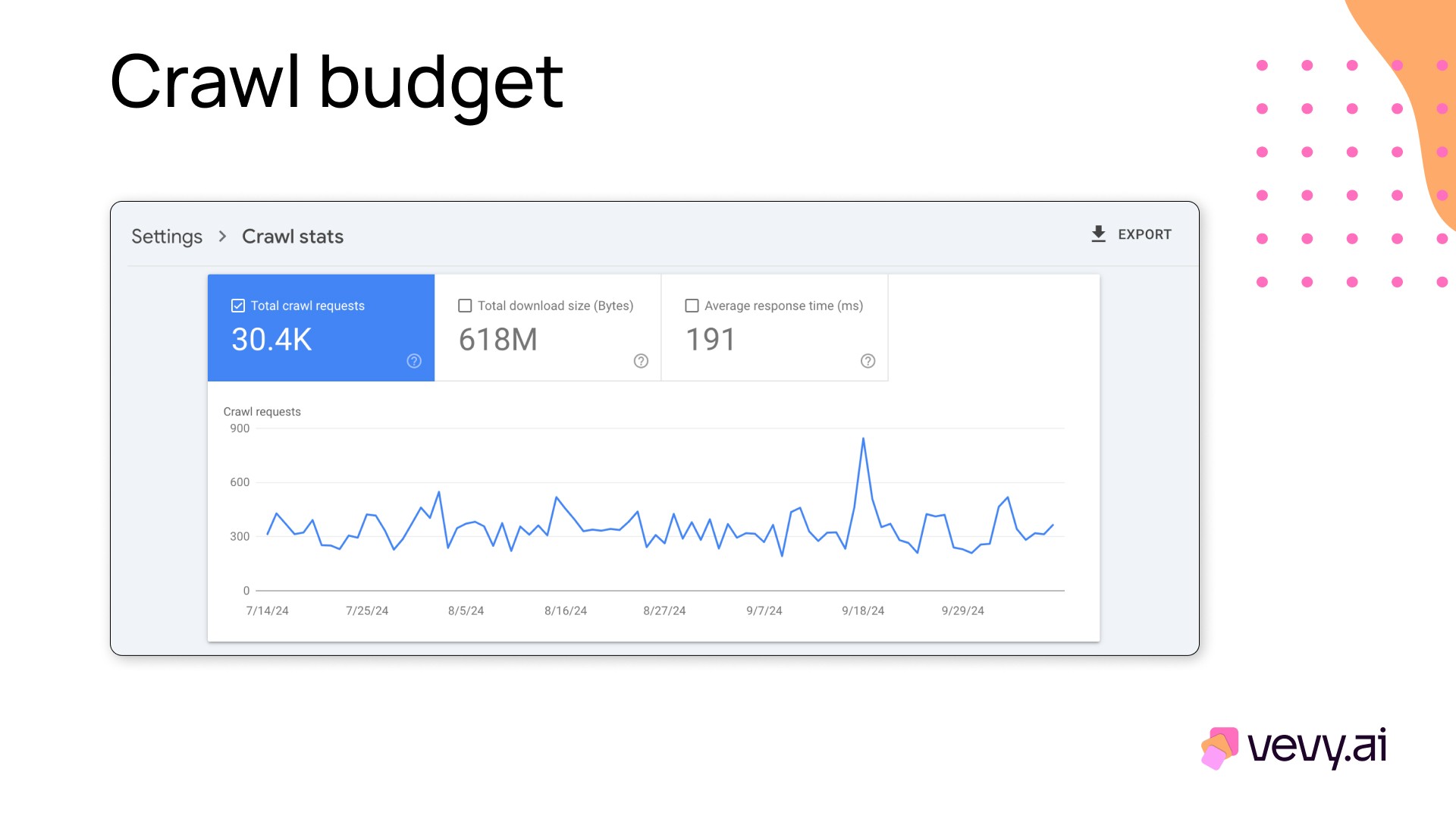

The image above displays the "Crawl stats" report from Google Search Console. It summarizes crawl requests made by Google's web crawlers (like Googlebot) to your site over a period of time. Here's what the key elements represent:

Total crawl requests (30.4K): This shows the number of times Google's crawler accessed pages on your site (in this case, 30,400 requests) during the selected time period.

Total download size (618M): This indicates the total amount of data downloaded by the crawlers, in megabytes. In this case, the crawlers downloaded 618 MB of data while crawling your pages.

Average response time (191 ms): This shows how long it took, on average, for your server to respond to Google's requests (191 milliseconds). A lower response time indicates a faster server, which can positively impact how efficiently your site is crawled.
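The three figures can be combined into rough derived metrics, such as the average download size per request. The following is a minimal sketch, assuming "618M" means 618 million bytes; the `CrawlStats` class and its method names are hypothetical and not part of any Search Console API.

```python
from dataclasses import dataclass


@dataclass
class CrawlStats:
    """Headline numbers copied by hand from a Crawl stats report."""
    total_requests: int
    total_download_bytes: int
    avg_response_ms: float

    def avg_bytes_per_request(self) -> float:
        """Average amount of data downloaded per crawl request."""
        if self.total_requests == 0:
            return 0.0
        return self.total_download_bytes / self.total_requests

    def total_response_seconds(self) -> float:
        """Approximate total time the server spent answering the crawler."""
        return self.total_requests * self.avg_response_ms / 1000


# Values from the report described above (618M assumed to be 618,000,000 bytes).
stats = CrawlStats(
    total_requests=30_400,
    total_download_bytes=618_000_000,
    avg_response_ms=191,
)
print(f"{stats.avg_bytes_per_request() / 1000:.1f} KB per request")
print(f"{stats.total_response_seconds() / 60:.0f} minutes of total response time")
```

With these inputs, the average works out to roughly 20 KB per request. An unusually large average can point to heavy pages that slow crawling down.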

In the chart, you can observe fluctuations in crawl activity. The spikes (a higher number of requests) may mark times when Googlebot was more active on your site. These spikes might happen if you have published new content or made major updates, or if Google deems it necessary to refresh its indexed version of your site.
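If you copy the daily request counts out of the report, one simple way to flag spikes is to compare each day against the median. This is only an illustrative heuristic, not how Search Console itself identifies spikes; the function name `find_spikes`, the `factor` threshold, and the sample counts are all made up for this sketch.

```python
from statistics import median


def find_spikes(daily_requests: list[int], factor: float = 2.0) -> list[int]:
    """Return the indexes of days whose request count exceeds factor x median."""
    if not daily_requests:
        return []
    baseline = median(daily_requests)
    return [
        day for day, count in enumerate(daily_requests)
        if count > factor * baseline
    ]


# Hypothetical daily crawl request counts for one week (not real data).
sample_week = [300, 320, 310, 900, 305, 315, 880]
print(find_spikes(sample_week))  # [3, 6]
```

The median is used as the baseline because, unlike the mean, it is not pulled upward by the spikes themselves. Flagged days can then be checked against your publishing and deployment history.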

A well-optimized site with fast server response times and regularly updated content helps ensure efficient use of crawl budget and a healthy Googlebot crawling frequency.